What I learned after Meta's algorithms silenced me, and then Adam Mosseri listened

Good morning from sunny Washington, where spring is now accelerating into a climate that resembles a New England summer, the Senate is back in session, and I'm getting ready to go up to Maine to sing in an a cappella reunion again. Déjà vu, all over again.

Thanks to everyone who has subscribed so far, particularly the folks who have trusted me with a paid subscription. If you find these newsletters valuable, please share them with your friends, family, and colleagues over email, text, and social media. I hope those of you who haven't will consider upgrading.

I have literally dozens of ideas for future newsletters. Today I'm going to share what happened to me over the past week and weekend, with an eye on what my experience does – or does not – mean for billions of people around the world.

As folks who follow me on social media already know, I have had several surreal Internet moments over the past week.

This past weekend, I had the joy of being adjacent to the "Main Character" on Bluesky, when Adam Mosseri answered my question about why Meta was blocking links to the Kansas Reflector.

I was surprised when the head of Instagram replied to me, stating that the issue "looks like…a few domains, including thehandbasket.co, were mistakenly classified as a phishing site, which has since been corrected as Andy mentioned. Unfortunately, at our scale, we get false positives on safety measures all the time. Apologies for the trouble."

And then for 24 hours, I watched anger, outrage, and vitriol in my mentions, mostly directed at him and Bluesky's trust and safety lead, who drew opprobrium for thanking Mosseri for sharing his perspective and agreeing that the issue "definitely looked like a false positive classifier problem on my end based on the user messaging."

When I asked Mosseri follow-up questions about what an acceptable error rate in moderation or false positive rate in flagging spam or manipulation is, how Meta balances moderation at scale against retaining consumer trust, and if they whitelist creators, he answered those, too, stating that:

"We aim to minimize prevalence of problems, ideally to a level too low to be able to measure, while minimizing false positives. We don’t except large accounts, but we do offer appeals when we take content and accounts down so we can correct mistakes."

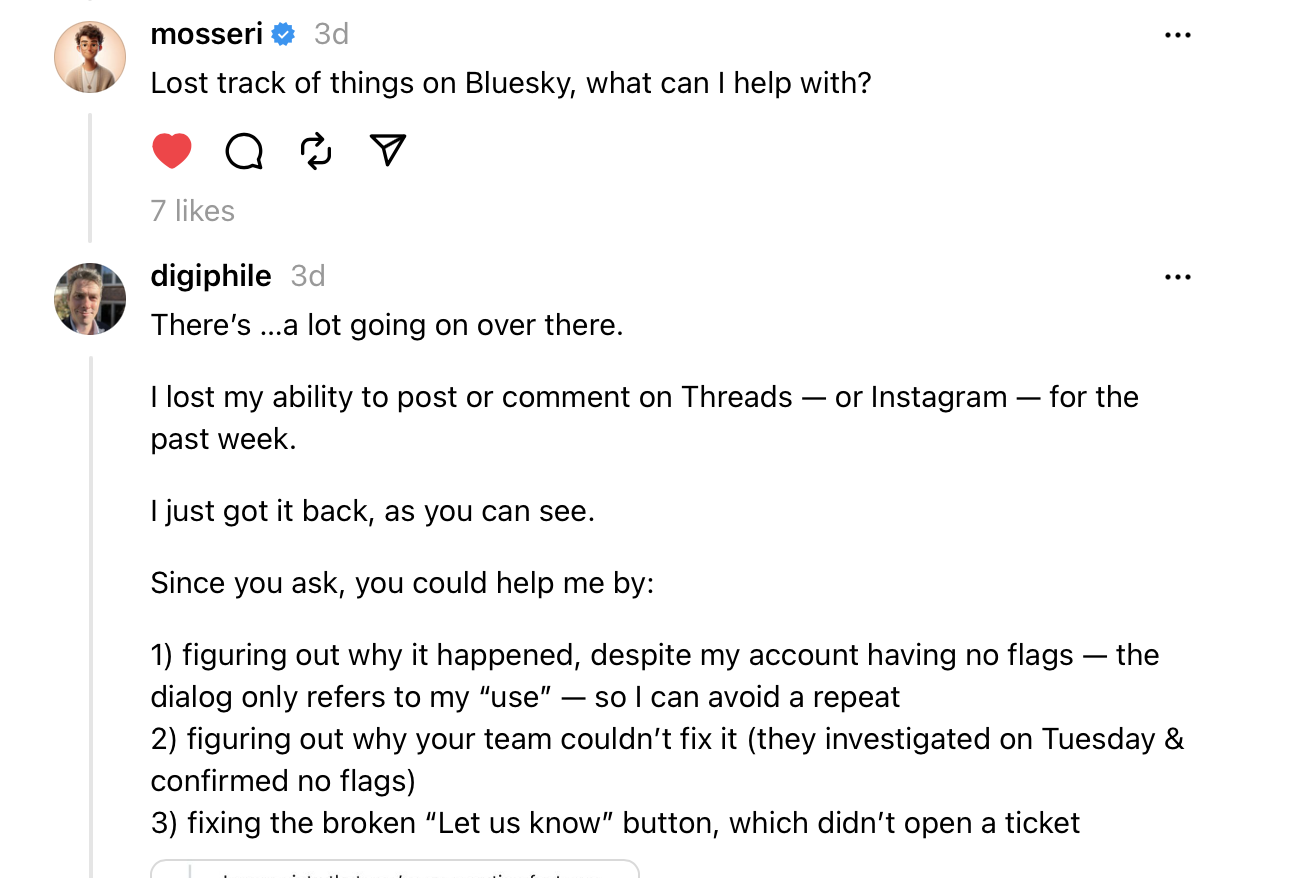

Selfishly, I hoped that this moment of his attention would restore my ability to post on Threads. But on Sunday morning, I still couldn't post or comment.

Then, on Sunday afternoon, something unexpected happened.

After I regained the ability to post and comment on Threads, Adam Mosseri followed up, offered to help, and then direct messaged me on Instagram with his email.

I sent him three questions, which he forwarded to Meta support:

1) Why did this happen, despite my account having no flags — the dialog only refers to my “use” — so I can understand, avoid a repeat, & tell others what happened

2) Why couldn't Meta's team fix it – they investigated on Tuesday & confirmed no flags

3) When will Meta fix the broken “Let us know” button in this dialogue? It doesn't connect to an appeal process, open a ticket, or link to a FAQ.

If I hear back, I'll write a follow-up.

Honestly, this experience seems akin to getting help with Gmail from Sundar Pichai or support on Twitter from Jack Dorsey. (I suspect Elon Musk DMing me about a moderation problem and bug with an appeal process would feel different. Once upon a time, a Twitter CEO replied to me about reporting issues, but that was a long time ago, in a galaxy far, far away.)

As a result of asking for help, I've received more support from Meta staff about Meta software problems after I posted on a Meta platform and then corresponded with a Meta executive about his Meta product.

It’s all a bit… meta!

I was actually heard, after a week of being silenced and ignored.

But I still don't know why my Threads account was limited.

The dialog only referred to "use." The Meta staff I'd contacted on Tuesday didn't see any flags on the account, but they didn't come up with an explanation or restore my ability to post.

Four days later, I also haven't gotten any answers about the broken button.

This will all be worth it if it leads to getting an opaque moderation process and janky appeals button fixed for tens of millions of people.

I don't know how optimistic to be about that, to be honest, but it beats being muted without explanation with a broken dialog button.

Despite Mosseri's help to me, this is also a reminder that the corporation's customers and clients are the advertisers who generate tens of billions of dollars in profits, not the people who post on those platforms.

What he did is not remotely scalable. I understand why Meta is turning to AI to moderate content on platforms with billions of consumers, but doing so is shifting the administrative burden to us.

Two decades into the modern social media era, the corporations and executives who operate these platforms have still not adopted the Santa Clara Principles, which would bring much-needed accountability and transparency to content moderation.

Given how contested the decisions to limit, label, take down, suspend, and ban content or accounts have become after a historic pandemic and incitement of offline violence with online hatred, it's critical that Mosseri, Mark Zuckerberg, and everyone else at Meta start investing much more in a transparent and accountable moderation process.