What danah boyd told us about AI and ethics

Good morning from Washington, where the Senate dismissed impeachment charges against DHS Secretary Mayorkas on a party-line vote, the House will consider foreign aid to Ukraine, Taiwan, and Israel, privacy legislation seems to be moving forward, and NPR staffers. (Jury selection is ongoing in an "unpresidented" criminal trial in New York City, if you've somehow missed the news.)

Alex Howard here, back in your inbox with another edition of Civic Texts. I'm continuing to think through the best pace, frequency, and formats for these newsletters, so stay tuned. If you have suggestions, questions, comments, tips, or concerns, you can find me online as @digiphile across social media, or email alex@governing.digital. If you find these newsletters valuable, I hope you'll consider sharing them with your social networks, forwarding to colleagues, and upgrading to a paid subscription to support my work. Thank you for your continued support!

Who will be in AI's "Moral Crumple Zones"?

Last week, I cycled over to Georgetown to see danah boyd give a talk at the university's newish Center for Digital Ethics, in anticipation of her making some sense of what’s happening with artificial intelligence right now and what she anticipates unfolding all around us in the year to come. It was marvelous to see her again after so many years and enjoy her perspective.

As usual, danah gave me a LOT to think about in a chewy talk. Georgetown may post video or slides. I'm hopeful that she'll publish her remarks as prepared for delivery on her blog. In the interim, I've summarized what I heard using my notes and hope it will prove useful.

Her core lesson to us was an exhortation to think "sociotechnically," and avoid determinism or techno-solutionism. For me, as a trained sociologist and recovering technology journalist, this is both welcome and cuts close to the bone, as it has for a decade.

As she noted to a hall full of Washingtonians, policymaking is rife with solutionism — using legislation or regulation to solve a societal problem. It's also deeply embedded in the logic and code of Silicon Valley, where techno-solutionism – the idea that the right technology can solve a societal problem – has been scaling for years. Deja vu, all over again.

danah gave us an important concept to consider for the rapidly expanding, emergent uses of AI, using the ways the USA regulates air travel as a useful prompt. When the ability to make decisions has shifted to a private industry without accountability, beware.

In airplanes, humans must remain in the loop, but sometimes the automated systems can get in their way, as with Boeing and the Max 8 disaster – long before the current rash of problems with the aerospace giant. danah noted that when it comes to Captain "Sully" and the Miracle on the Hudson, he had to make objections to save people’s lives.

She talked about "moral crumple zones," citing Madeleine Elish's evocative concept that describes "how responsibility for an action may be misattributed to a human actor who had limited control over the behavior of an automated or autonomous system."

When there's no ability for pilots to override systems, it results in crashes. danah says the pattern is consistent: people would have been saved if pilots could override a technical system that wasn’t up to snuff. She's concerned that we currently don’t have structures in place to hold tech companies accountable over time for similar issues.

The question of who gets to define acceptable outcomes in policy, programs, and services is always about power and whose values are reflected in them.

(This is why I've spent years advocating for liberal democratic values to be embedded in American tech companies' code and governance, lest authoritarian values from alternative systems of government become the defaults. Privacy-enhancing technologies are great. Coercive theofascism, not so much.)

danah gave us a mundane but profound example of this dynamic: scheduling software. This is the software anyone who schedules workers for shifts uses. As she observed, the companies who make such software can optimize for many different things.

The question is whose values are centered. If the goal is staff happiness, what would you look for? Might that mean regular shifts, near home, with people they want?

But this software is sold to people who have power, not workers. What if an employer wants to ensure that no one hits full-time work to avoid paying benefits? Or to avoid staff having the same shifts, to avoid unionizing? Or to keep shifts irregular, so staff can't get other work, ensuring an on-demand, contingent labor pool?

These kinds of systems have knock-on effects, as boyd noted, citing research that found the children of parents in these kinds of jobs are a grade level behind.

It's not that applying technology to improve wicked societal problems is inherently wrong, though. boyd cited Crisis Text Line, where she was a founding board member, as a successful attempt at making mental health software that serves people in need, along with those seeking to help.

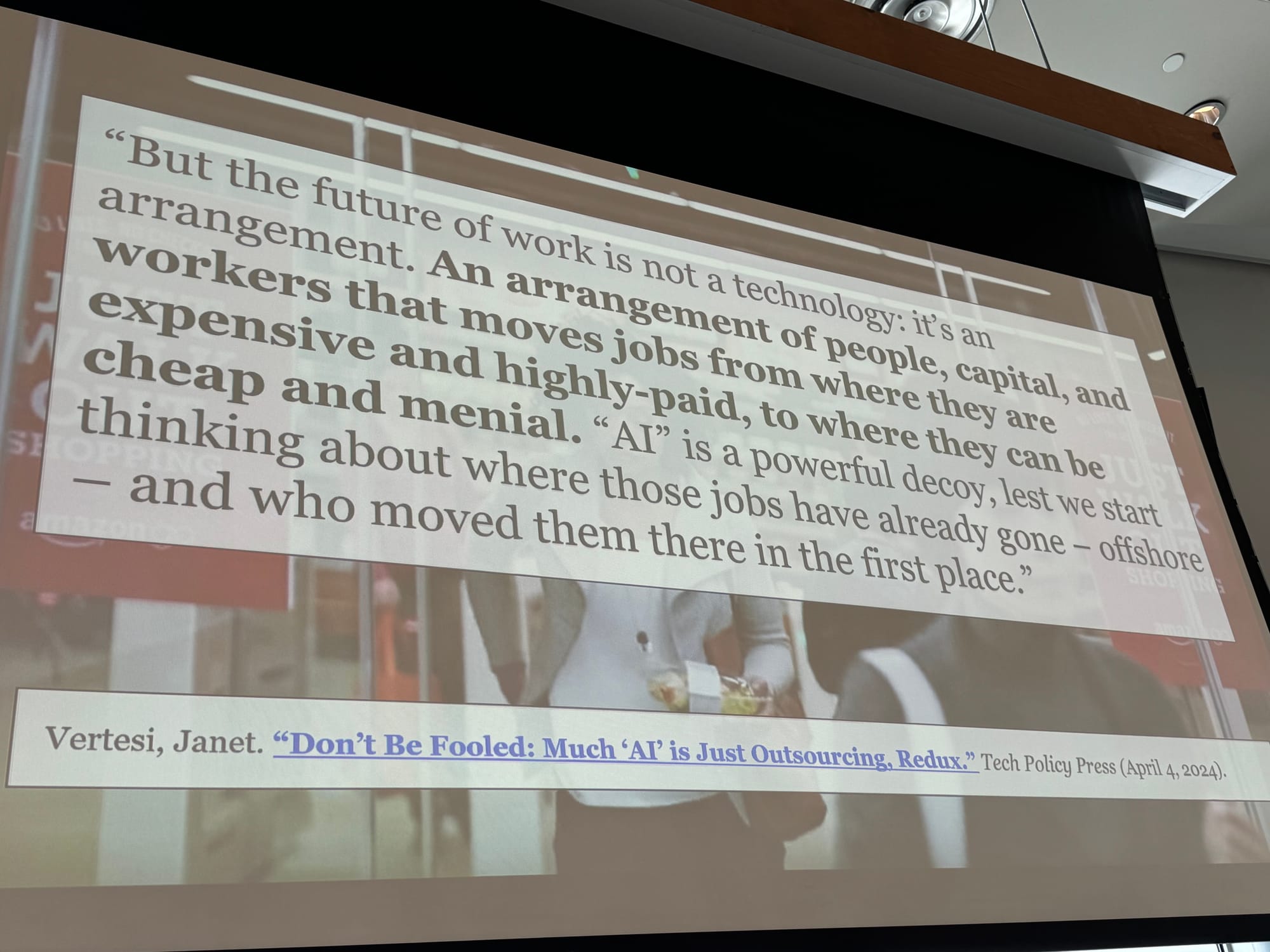

The better question is: if we use tech, to what ends? As boyd said, drawing on science and technology studies, arrangements matter. These are the economic and political conditions that technology is built and experienced within, which shape both the development and uses of technology and our expectations around it.

boyd says in the current framing around generative AI, everything is about efficiency and productivity, not making work more joyous. It's about minimizing cost – and eliminating humans, which has been a vision of capitalism for over a century.

And here danah gave us something lovely to chew on: whether we've been thinking about Luddites and Luddism the wrong way. Luddism was a movement in 1810-1817, when new manufacturing technologies were changing entire areas of the United Kingdom. Before, danah said, tech empowered workers. Then automated looms in textile manufacturing took power away and added increased risks of injury.

People were angry at machines and attacked them, which led to murder by soldiers and then to Luddites being hanged in town squares. Criminalization of attacking industrial machines and backlash ended that movement, but Luddism endures, said boyd.

The Luddites introduced the question of what workers' rights should be, which then permeated pop culture of the time. Shelley's "Frankenstein" is a story in response: it's not about if you can build tech, but if it will fit into society. Literature moved ideas forward.

Many modern arrangements look more like outsourcing than automation. More recently, Amazon's amazing "just walk out" tech turned out to depend on many humans watching humans – a classic mechanical Turk. What's now at hand could be a rearrangement of workers and work, not replacement, per se. Within these new systems of software-mediated employment, new inequalities can emerge.

boyd explained why she is fascinated by generative AI, due to its implications for the arrangements across society. We are now seeing trust degrade in organizational settings, with awareness of who is gifting others a sense of time. It depends on arrangement or power! There are amazing success stories, like people who speak English as a second language having a system to check their writing, or using the tool to write and rewrite difficult, complex emotional messages to get it right. There's also humor, where poor results lead groups to find solidarity in collective hatred of AI tools.

danah focused us on being cautious about designing models and applying them without thinking about the broader social system and how to make it collectively beneficial. Her example was a tool at Duke designed to detect when sepsis is likely, which took for granted that nurses would constantly provide feedback in the system when they had no time or incentive to do so.

People seeking to apply AI and automation need to take into account social impact and include repair costs downstream of changes in arrangements or

Finally, danah called on all of us to attend to externalities and be responsible for the future, as with tracking down the environmental costs of the energy demands and cooling needs of the data centers that power generative AI. As she notes, citing recent investigative journalism, there's no question that the increasing strains on the energy grid and the environmental costs are significant. As electric grids reach capacity and the water situation becomes more serious, the externalities won't be theoretical to communities, as opposed to the hazy metaphor of "the cloud."

On a warming planet, she challenged us, how do we think about the ethics of chewing up the resources required for computing? How do we include environmental factors, or consider costs of maintenance and repair?

If we think about our political and economic environment, and take a sociotechnical approach, we can see some tools are fun, and some are useful. What matters is how these things are configured, and by whom.

We need to look beyond hype, as with the folks who said in 2023 that AI will end humanity or who proclaim AI more important than electricity or fire, and invite some form of resistance, or friction, to widespread development.

These are not inevitable outcomes. They are choices. We need nuanced conversation, to avoid determinism, and to learn from history, focus on arrangements that are robust & sensitive, and remember we all have agency to help create the future.

When I asked her about what states and nations should do, citing the example of the imposition of the catalytic converter to address smog and acid rain, danah said the right frame is interventions.

We aren't going to fix OpenAI by going right at them. Instead, make the economic cost of it egregious, so they have to pay environmental costs up front.

Much to chew through.

It's an interesting provocation! As always, feel free to let me know what you think about it at alex@governing.digital.