On prebunking, community facts, TikTok’s last dance, and President Carter

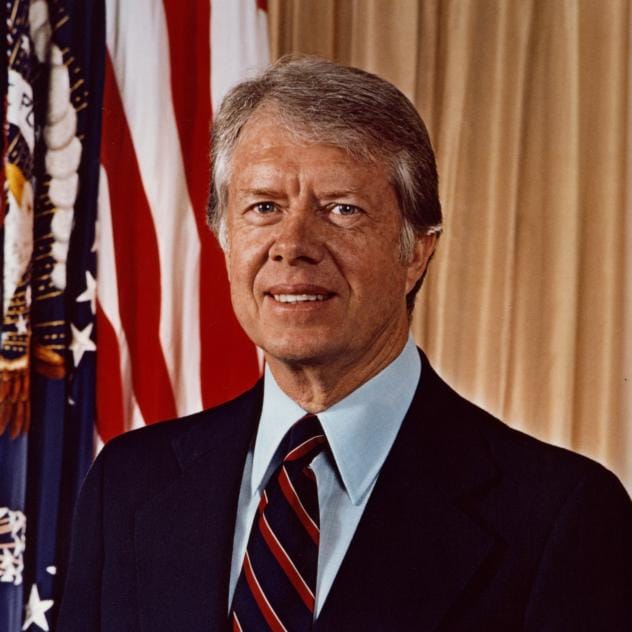

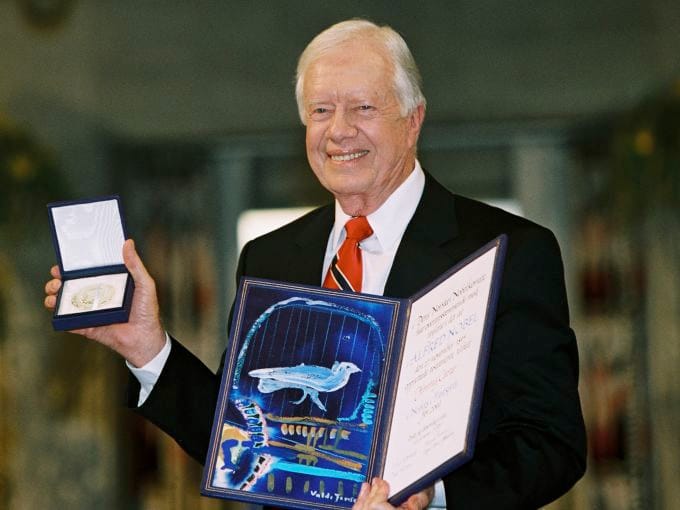

Good afternoon from Washington, where I’m adjusting to a nearly 60-degree temperature swing from upstate New York and reflecting on the extraordinary life of Jimmy Carter, the 39th President of the United States, who died yesterday at age 100.

Thank you to everyone who’s subscribed to Civic Texts this year. I hope you and yours are well in this holiday season! If you can, please consider upgrading to a paid membership or providing direct support.

RIP, President Carter

Peacemaker, patriot, peanut farmer, Carter redefined the presidency, reformed the U.S. government, and then rewrote who a former President can be.

From humble beginnings in Georgia, Carter went on to become a Navy officer, nuclear engineer, state senator, governor, POTUS, author, professor, global humanitarian, Nobel laureate, and much more.

He was a true Renaissance Man and in many ways America’s Cincinnatus: Honest, decent, humble, honorable. His kindness, compassion, and humility stand out in our politics today, for obvious reasons.

RIP. Make time for the full NYT obituary (gift link), and to remember his life on January 9th, which President Biden has declared a national day of mourning.

Will TikTok survive the new year in the United States?

As long-time readers know, my view is that the Congressional mandate requiring ByteDance to divest from TikTok or see it banned in the U.S.A., on the basis of undisclosed, theoretical national security concerns, violates the constitutional rights of tens of millions of Americans.

This December, a federal appeals court disagreed, finding those concerns credible.

Over the holiday, President-Elect Trump’s lawyers filed a florid amicus brief asking the Supreme Court to stay the mandate’s implementation date, currently January 19.

Notably, the brief didn’t take a position on TikTok’s First Amendment argument.

The Supreme Court has agreed to hear arguments on January 10th, demonstrating that Chief Justice Roberts can move quickly when he wishes, unlike the sluggishness with which the Court handled Trump’s ahistorical, unconstitutional claims of immunity, denying justice in the conspiracy and obstruction case.

While I am not a lawyer, I’ve been a First Amendment advocate for over a decade. I bet the Court will have a hard time not finding the law unconstitutional, but it may find a way. If so, beware: banning apps, websites, and services over unspecified national security concerns would be a terrible precedent for the United States to codify and reify in 2025, especially under a more authoritarian President.

Community Notes could make social media better, if adopted outside of X

Billionaire Elon Musk has been having a complicated holiday, as a schism has broken out between far-right nationalists who oppose immigration and hold racist views and the Silicon Valley tech CEOs and venture capitalists who favor high-skilled immigration, pluralism, and multiracial workforces, if not the government and corporate “diversity, equity, and inclusion” policies MAGA folks abhor.

(My view: A great nation would have a world-class immigration system for legal immigrants seeking sanctuary & economic opportunity. Safe, efficient, understandable, humane. Apply for asylum, find refuge. Enter illegally, get deported. Serve in the military, earn citizenship. Achieve a PhD, earn a green card.)

Yesterday, Musk encouraged people to tweet more positive or informative content, two days after telling folks on the platform “f-ck yourself in the face.”

Good times! Musk will need to be the change he wishes to see in the world, on that count.

What I’m more interested in today, however, is sharing a few thoughts on a genuine innovation that Twitter rolled out years ago, long before Musk bought it and started remaking the company and platform: Community Notes, formerly known as Birdwatch.

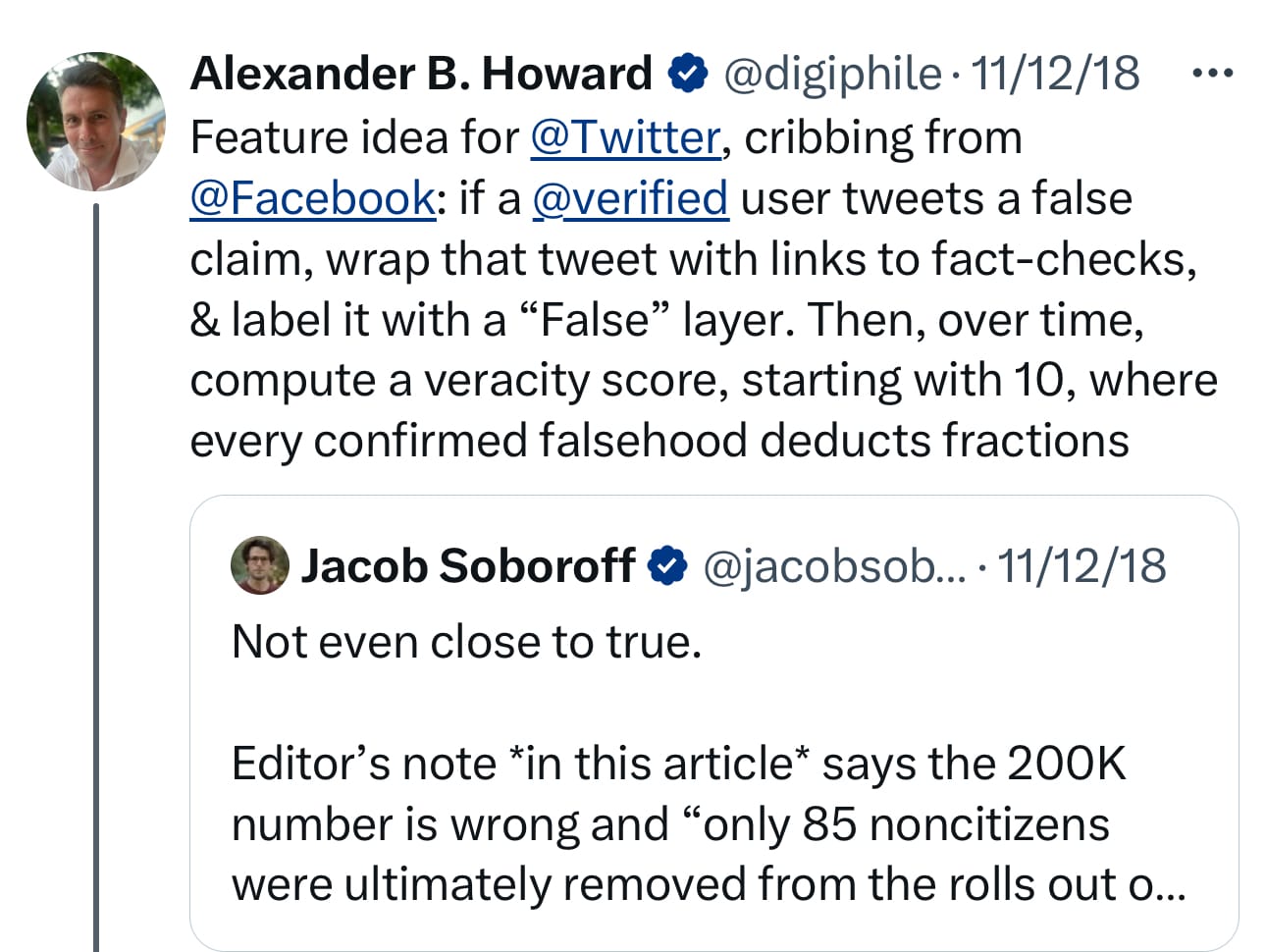

Back in 2018, in the Before Times, I was directly engaged with Twitter staff about improving how the platform handled trust, safety, and integrity issues, given how central it remained to power, politics, and media.

I was deeply frustrated with the lack of transparency in moderation, which I anticipated would be weaponized to support false claims of ideological bias, and the lack of accountability for public figures abusing the platform. I can’t say either was satisfactorily resolved by Twitter.

Twitter tried labeling tweets, but the labels failed to effectively fact-check Trump’s lies about election fraud. Now, Community Notes are often failing to fact-check falsehoods & conspiracies by Musk and political figures.

X Trust and Safety interstitials that stated the facts first could help by prebunking, but the department has been gutted and demoralized, and Linda Yaccarino will never do that for either man.

She has to know Musk would likely fire her and any staff who prebunked his tweets, let alone removed them. He has made himself uncancellable and immune to deplatforming, unless an entire country bans X, as Brazil did earlier this year.

What responsible media and tech companies could do here seems clear…as it was back in 2020, when I talked with Brian Fung at CNN for his piece on why Twitter’s labels utterly failed to prevent Trump’s Big Lie about election fraud from spreading and becoming an alternative fact among his supporters.

“Cognitively, we're predisposed to believe the things we see first. If you just have a blue link at the bottom that just says something banal, like 'learn the facts,' it's not going to work.

A more effective label might be one that masks the media entirely and states the facts first: 'US government election officials from both parties and independent experts all say this was the most secure election in history, with no widespread fraud.'

Any interstitials with prebunks should be clearer, like the surgeon general's warnings on cigarette labels.

When you put pictures of mouth, throat and lung cancer on cigarette packs, that creates a different kind of disincentive than just saying, 'This is known to cause cancer.'”

In 2024, that’s still not happening across Meta platforms, TikTok, X, or YouTube, which has taken the brakes off accounts that post videos with conspiracy theories and disinformation: known falsehoods intended to cause harm, division, doubt, and discord.

In 2025, that could change — but only if technology CEOs reject the falsehood that labeling posts is a form of censorship.

I doubt any will, but it’s a democratic option that balances preserving speech with protecting the integrity of democracy, unlike “fake news” laws that enable governments to censor.

Tech platforms could also display trustworthiness indicators on verified creators' profiles based on their track record of spreading misinformation, singling out paid-checkmark accounts and public figures who have no commitment to veracity.

Going forward, technology companies should consider placing the accounts of repeat misinformation peddlers and merchants of doubt, denial, and deception into informational quarantines, where all posts are previewed for policy violations before appearing on social media — not after.

In a world where the work of disinformation researchers and journalists who fact-check posts and rate the (in)credibility of publications is now decried as the “global censorship industrial complex,” and where the State Department’s Global Engagement Center was just defunded, implementing such an approach in the United States may be tough sledding for the American oligarchs cozying up to Trump, but we might see continued innovation globally.

Musk has since made all of the issues I’ve described worse, and added new, even more unwise choices: selling “verification” as a subscription and incentivizing people to share enraging content and misinformation for profit.

He has also gutted the trust and safety team and doubled down on Community Notes, relying on crowdsourced fact-checking to mitigate lies and rumors without the strong backbone of safety officials and support staff.

It’s not going so well.

A policy that allows all speech short of incitement to violence will mean misogyny, anti-Semitism, racism, xenophobia, & transphobia will run rampant, alongside rumors and conspiracy theories.

Cutting safety capacity will make enforcement moot. Waves of hatred will chill expression, not free it. Tsunamis of bullshit and waves of misinformation will drown truth, not surface it.

Anyone who’s held a town hall, taught a class, moderated a panel, or hosted a book club or union meeting knows strong moderation is critical for protecting everyone’s speech.

Great teachers create healthy forums for speech by setting & maintaining norms. Deft moderation prevents participation from being chilled. Online, it’s even harder.

Musk knows online platforms must moderate CSAM, snuff & torture videos, copyrighted content, spam, & phishing, but it’s easier to farm engagement & mock the adults in the room than to acknowledge the realities of the business he’s running into the ground.

What separates MetaFilter from X, Quora, Reddit, Meta, & 4chan is culture, rules & expectations, developed over a long time & maintained by a community. (Bluesky is taking a hybrid approach to trust and safety that represents its own innovation.)

While Musk continues to claim that Community Notes are “the best source of truth on the Internet,” research has found that the majority of accurate fact-checks on political posts are never shown.

To put it another way, Notes are failing to provide a meaningful check on misinformation on X — much less the lies, conspiracy theories, and rumors that Trump tweets and that Musk himself has continued to amplify. It’s unfortunate.

I still believe such community annotations are one of the most promising innovations online. Notes show how an online community can add a veracity layer on a social platform, subject to Safety rules and reviews.

While the world obsessed over Musk, Trump, and takedowns, Twitter and then X kept quietly innovating and measuring the impact of Notes on people encountering false or misleading information.

I was fascinated to be part of the early beta and pilot program. I’m a professional skeptic & retain concerns, but it was inspiring to see a distributed project kinda… work.

This was one of the most promising features & programs Twitter ever launched, after Bluesky, & it kept maturing at a moment when crowdsourced fact-checking is most needed.

Twitter should have done a far better job educating us about @birdwatch & how it works. It really should have educated journalists, many of whom seemed surprised by it in 2022 and did not understand how it works, including when notes are added (user choice) or surfaced (votes).
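Because notes are surfaced by votes rather than by staff, it may help to see roughly how that can work. The open-source Community Notes scorer is publicly described as a "bridging" algorithm based on matrix factorization: each rating is predicted from a global mean, a rater intercept, a note intercept, and the product of rater and note "viewpoint" factors, and notes are favored when their intercept stays high after viewpoint agreement is factored out. The Python below is a minimal, simplified sketch of that idea, not X's production code. The helper names `fit_bridging_model` and `is_shown`, the one-dimensional factors, the full-batch gradient descent, the regularization weights, the toy ratings, and the display thresholds (an intercept of at least 0.40 and a factor magnitude under 0.50, roughly in line with the public documentation) are all assumptions for illustration.

```python
import random


def fit_bridging_model(ratings, epochs=4000, lr=0.01,
                       lam_intercept=0.15, lam_factor=0.03, seed=0):
    """Fit rating ~ mu + i_u + i_n + f_u * f_n by full-batch gradient descent.

    ratings: list of (user_id, note_id, value); value 1.0 = helpful, 0.0 = not.
    Hypothetical helper: a simplified sketch of bridging-based matrix
    factorization, not the production Community Notes scorer.
    """
    rng = random.Random(seed)
    users = sorted({u for u, _, _ in ratings})
    notes = sorted({n for _, n, _ in ratings})
    mu = 0.0
    i_u = {u: 0.0 for u in users}  # rater leniency
    i_n = {n: 0.0 for n in notes}  # note helpfulness beyond viewpoint
    f_u = {u: rng.uniform(-0.1, 0.1) for u in users}  # rater viewpoint
    f_n = {n: rng.uniform(-0.1, 0.1) for n in notes}  # note viewpoint

    for _ in range(epochs):
        # Start each gradient with its L2 penalty. Intercepts are penalized
        # more than factors (an assumed choice), so support from only one
        # viewpoint is cheaper to explain with the factors.
        g_mu = 2 * lam_intercept * mu
        g_iu = {u: 2 * lam_intercept * i_u[u] for u in users}
        g_in = {n: 2 * lam_intercept * i_n[n] for n in notes}
        g_fu = {u: 2 * lam_factor * f_u[u] for u in users}
        g_fn = {n: 2 * lam_factor * f_n[n] for n in notes}
        # Add the gradient of the squared prediction error for every rating.
        for u, n, r in ratings:
            err = mu + i_u[u] + i_n[n] + f_u[u] * f_n[n] - r
            g_mu += 2 * err
            g_iu[u] += 2 * err
            g_in[n] += 2 * err
            g_fu[u] += 2 * err * f_n[n]
            g_fn[n] += 2 * err * f_u[u]
        # Apply all updates at once (gradients used the previous parameters).
        mu -= lr * g_mu
        for u in users:
            i_u[u] -= lr * g_iu[u]
            f_u[u] -= lr * g_fu[u]
        for n in notes:
            i_n[n] -= lr * g_in[n]
            f_n[n] -= lr * g_fn[n]
    return {"note_intercept": i_n, "note_factor": f_n}


def is_shown(model, note, min_intercept=0.40, max_abs_factor=0.50):
    """Assumed display rule: high intercept and not strongly one-sided."""
    return (model["note_intercept"][note] >= min_intercept
            and abs(model["note_factor"][note]) < max_abs_factor)


# Toy data: raters a0-a3 and b0-b3 form two camps that usually disagree.
ratings = []
for k in range(4):
    a, b = f"a{k}", f"b{k}"
    ratings += [
        (a, "bridge", 1.0), (b, "bridge", 1.0),  # both camps: helpful
        (a, "left", 1.0), (b, "left", 0.0),      # only camp A likes it
        (a, "right", 0.0), (b, "right", 1.0),    # only camp B likes it
        (a, "bad", 0.0), (b, "bad", 0.0),        # nobody finds it helpful
    ]

model = fit_bridging_model(ratings)
for note in ("bridge", "left", "right", "bad"):
    print(note, round(model["note_intercept"][note], 2),
          round(model["note_factor"][note], 2), is_shown(model, note))
```

In this toy setup, the note both camps rate helpful should end up with a high intercept and a near-zero factor, while the notes only one camp likes are explained mostly by the viewpoint factor and stay hidden. That is the "bridging" intuition in miniature: agreement across people who normally disagree counts for more than a pile of votes from one side.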

Thanks to Community Notes’ open data & code, independent researchers could study outcomes over time. (The initial opacity around X content & account moderation that followed Musk’s purchase has given way to more transparency, which has shown that X is censoring more content and taking down more accounts after government demands.)

Studies found that tweets with Notes are (organically) retweeted less & that there is an 80% increase in deletions after a tweet is Noted.

The expansion of Notes around the world at the same time tweets became editable was fortunate.

Between Notes, Circles, Moments injected into feeds, labels & interstitials, & info centers elevated into search, Twitter was so close to evolving into a different platform when one was needed. Then Musk bought it.

It’s been disappointing (if unsurprising) to see @verified users not delete viral tweets with Notes debunking them.

But without professional oversight and a robust, ideologically diverse, & strong community, a Notes approach can & will go wrong, resulting in public notes with lies, conspiracies, slander, & BS on the tweets of public figures, like Wikipedia entries without editors.

Volunteer-led Community Notes were insufficient to mitigate the spread of election or January 6th denialism, much less state-sponsored disinformation, terrorist lies & propaganda, or the misinformation associated with war.

That’s where my biggest disappointment lies. Musk’s ideological biases, pathologies, and far-right political preferences create structural conflicts with the practice and profession of fact-based journalism and peer-reviewed science.

But implementation remains uneven on @X, undermined by its owner’s antipathy to journalists, who could have been key partners in verifying facts & adding context, and by his gutting of the teams that could have reviewed inaccurate notes.

A tech platform that partnered with news media, hiring and paying independent press and subject matter experts to write and review notes, could take this to the next level.

In June 2024, YouTube began testing a version of Notes. A lovely thing about Notes is that the project has been conducted in the open, from source code to data to research. I hope YouTube does the same!

The approach could be adopted, adapted, integrated, & scaled by Alphabet, Meta, Microsoft, Bluesky, and, just maybe, news media doubling down on the World Wide Web as social media, search, and AI companies keep distribution and discovery in walled gardens.

Hope springs eternal.