Reflections on Meta's Data Dialogue on data sharing

Good afternoon from cloudy DC. I hope you're well. Alex here, with another civic text. To be honest, I'm still grinding my teeth over oral arguments at the Supreme Court, where jurists appear open to the notion that a man can, in fact, be above the law in the United States. You can listen to the audio or read the transcript for yourself before reading analyses of the tea leaves or simmering over what we heard.

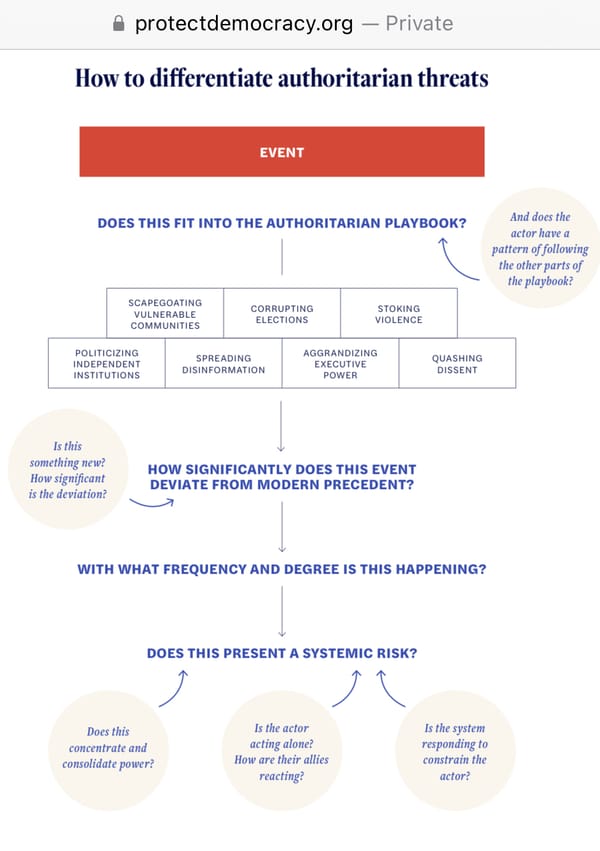

I tend to agree with David Kurtz that the Justices have failed our democracy at the worst possible time by taking this case at all, instead of affirming the lower court's ruling. The Justice Department should be prosecuting a former president who conspired to defraud the United States, attempted an autocoup, and incited seditious mob violence to prevent the transfer of power.

But, here we are.

On to happier matters, like how the world's largest social media platform collects and shares data with researchers – and governments, journalists, regulators, and consumers.

I kid. (Mostly.) Yesterday, I had the opportunity to go back to Meta's offices here in Washington to participate in another "data dialogue," where staff convene various stakeholders for a roundtable and workshop digging into a complex issue that's on their minds.

As with other data dialogues – and far too many White House fora – Meta's event was conducted under the Chatham House Rule, which allows participants to share what was discussed but not to attribute it to any speaker.

It's fair to say that my relationship with Meta is complicated. I haven't exactly been quiet about my criticism of Facebook, its policies, or its leadership over the years, as a journalist and as a good-governance advocate.

If a tech company fails to prevent its platform from being used to “foment division and incite offline violence” in a genocide and then allows an attempted putsch to be organized in my neighborhood, I tend to remember.

As I believe it's incumbent on all of us to prevent the worst ideas of the 20th century from continuing to plague humanity in this young millennium, however, I went back to Meta to suggest reforms that could help mitigate the risks to public health, national security, and civic integrity that lie ahead for democracies everywhere – including our own. There's no one coming to move carefully and fix things but us.

So I cycled up Pennsylvania to visit Meta's secured spaces and heard a presentation on data for social good. That includes public data about location, mobility, and social connections, published as part of Meta's Transparency Center since the program began in 2016 and now downloaded over 10 million times a year. It also includes non-public data sets shared with researchers, many of whom were present from universities across the United States. (I was reminded that targeting data for political ads is not public.)

I learned that, as of 2024, there are over 100 organizations across 70 countries able to access data sets through a "virtual data enclave" hosted by the University of Michigan. That access has included a project that made platform data available to researchers through the University of Michigan’s Social Media Archive (SOMAR) to study the effect of Facebook and Instagram on the 2020 election, which led to publications in Science and Nature in July 2023.

Most recently, Meta has launched a new content library and API, in addition to its ad library and its influence operations reports. The content library will extend beyond English content in the months ahead.

The key points of discussion that followed focused on who should be eligible to access data, under what conditions data should be deleted, liability or indemnity for use or misuse – reflecting the layers of lawyers involved, naturally – and consent for co-owned data. (Eligibility for access to Meta Content Library and its API will be restricted to non-commercial use but won't be limited to academic researchers.)

We also talked about who is responsible for data use, misuse, or abuse. To some extent, the answer was everyone involved: the platform, staff, researchers, and host institutions. The tension between privacy and transparency was present throughout, given the risk of re-identification of people within data sets.

I participated in a short workshop on how platforms should assess the prevalence, scale, and impact of election-delegitimizing language, which is a matter of considerable public concern in 2024.

During the workshop, I raised three related issues: authenticating the official accounts of politicians, campaigns, and governments by default to mitigate the risk of manipulation through impersonation, as opposed to charging for checkmarks; preserving official statements and electioneering by default in a public file; and adopting more transparency and accountability in content moderation, in order to create collective facts about suspensions, removals, labels, or limits to reach based upon violations of civic integrity policies rather than ideological animus.

After we broke, we all had an opportunity to make asks of Meta. The one that came up repeatedly was a request to keep CrowdTangle operating through mid-2025 rather than shutting it down in August.

For my part, I asked Meta to be more open and transparent and to explicitly take a pro-democracy stance, rather than remaining "neutral" toward authoritarian regimes that undermine democracies or attempt coups.

That might not maximize shareholder value every quarter, but it's a responsible bet for a company that has aggregated so much power over what billions of humans watch, read, hear, and share every day.

Thanks to everyone for reading, for subscribing, and for your continued support.